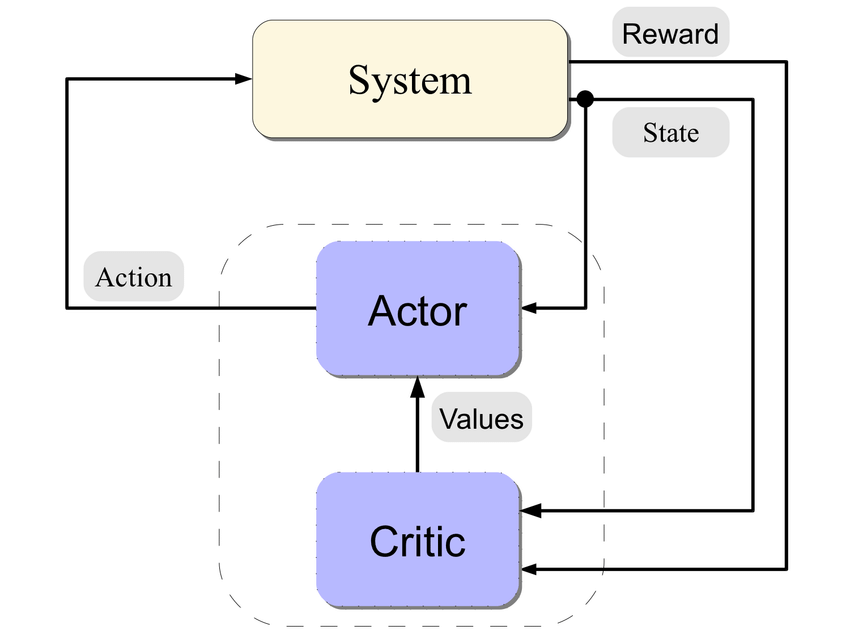

Advantage Actor-Critic (A2C) is a popular reinforcement learning algorithm that combines the benefits of both policy gradient methods and value-based methods. In A2C, an agent learns to maximize its rewards by interacting with an environment and receiving feedback in the form of rewards. The algorithm consists of two main components: the actor and the critic.

The actor is responsible for selecting actions based on the current state of the environment. It learns a policy that maps states to actions, aiming to maximize the expected cumulative reward over time. The critic, on the other hand, evaluates the actions taken by the actor by estimating the value function. The value function represents the expected cumulative reward that can be obtained from a given state following a specific policy.

One of the key advantages of A2C is that it updates the policy and the value function together, from the same batch of experience, which tends to make learning faster and more stable. The link between the two is the advantage function, A(s, a) = Q(s, a) - V(s), where Q(s, a) is the expected return after taking action a in state s and V(s) is the value of the state. It measures how much better an action is than the policy's average behavior in that state. In practice the advantage is estimated as the observed discounted return minus the critic's value estimate. Weighting the policy gradient by this advantage, rather than by the raw return, reduces the variance of the gradient estimates and improves convergence.
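The advantage-weighted update described above can be sketched in a few lines of NumPy. This is a minimal illustration rather than a reference implementation: the function names (`discounted_returns`, `a2c_losses`), the toy rollout, and the choice of a plain discounted return as the target are all assumptions made for the example.

```python
import numpy as np

def discounted_returns(rewards, gamma=0.99, bootstrap_value=0.0):
    """Compute G_t = r_t + gamma * G_{t+1}, working backwards through one rollout."""
    returns = np.zeros(len(rewards), dtype=np.float64)
    running = bootstrap_value  # critic's estimate for the state after the rollout
    for t in reversed(range(len(rewards))):
        running = rewards[t] + gamma * running
        returns[t] = running
    return returns

def a2c_losses(log_probs, values, rewards, gamma=0.99, bootstrap_value=0.0):
    """Return (advantages, policy_loss, value_loss) for one rollout.

    log_probs: log pi(a_t | s_t) of the actions actually taken (actor output)
    values:    V(s_t) predicted by the critic
    """
    returns = discounted_returns(rewards, gamma, bootstrap_value)
    # Advantage estimate: how much better the outcome was than the critic expected.
    advantages = returns - values
    # Actor loss: raise the log-probability of actions with positive advantage.
    # (In an autodiff framework the advantages would be treated as constants here.)
    policy_loss = -np.mean(log_probs * advantages)
    # Critic loss: regress the value estimates toward the observed returns.
    value_loss = np.mean((returns - values) ** 2)
    return advantages, policy_loss, value_loss

# Toy three-step rollout (made-up numbers).
rewards = np.array([1.0, 0.0, 1.0])
values = np.array([1.5, 0.5, 1.0])
log_probs = np.log(np.array([0.5, 0.25, 0.5]))
advantages, policy_loss, value_loss = a2c_losses(log_probs, values, rewards, gamma=0.5)
```

In this toy rollout the critic overestimated the first state, so only the first action receives a nonzero (negative) advantage, and the policy loss would push its probability down.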

Another advantage of A2C is that it scales well to large environments with high-dimensional state and action spaces. Several workers, each interacting with its own copy of the environment, can collect experience in parallel, and their experience is combined into a single batch for one synchronous update. This gives more diverse data per update and faster learning in wall-clock time, which makes A2C well-suited to complex tasks such as playing video games or controlling robotic systems.
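As a rough sketch of how parallel experience collection can look, the example below steps several environment copies in lockstep and stacks the results into one batch. `ToyEnv`, `collect_batch`, and `random_policy` are hypothetical names invented for illustration, and the environments are stepped one after another in a single process; a practical setup would usually run them in separate processes or use a vectorized environment library.

```python
import numpy as np

class ToyEnv:
    """Hypothetical 1-D environment: action 1 moves right (reward 1), action 0 moves left (reward 0)."""

    def __init__(self, seed):
        self.rng = np.random.default_rng(seed)
        self.state = 0.0

    def reset(self):
        # Start each copy at a random integer position in [-2, 2].
        self.state = float(self.rng.integers(-2, 3))
        return self.state

    def step(self, action):
        self.state += 1.0 if action == 1 else -1.0
        reward = 1.0 if action == 1 else 0.0
        return self.state, reward

def collect_batch(envs, policy, n_steps):
    """Step every environment in lockstep; return arrays of shape (n_steps, n_envs)."""
    states = np.array([env.reset() for env in envs])
    batch_states, batch_actions, batch_rewards = [], [], []
    for _ in range(n_steps):
        actions = policy(states)  # one action per environment copy
        results = [env.step(a) for env, a in zip(envs, actions)]
        batch_states.append(states)
        batch_actions.append(actions)
        batch_rewards.append(np.array([r for _, r in results]))
        states = np.array([s for s, _ in results])
    return np.stack(batch_states), np.stack(batch_actions), np.stack(batch_rewards)

# Stand-in for the actor: picks actions uniformly at random.
rng = np.random.default_rng(0)

def random_policy(states):
    return rng.integers(0, 2, size=len(states))

envs = [ToyEnv(seed=i) for i in range(4)]
states, actions, rewards = collect_batch(envs, random_policy, n_steps=5)
```

Each row of the returned arrays holds one time step across all four environment copies, so a single update can be computed from 20 transitions gathered under the same policy parameters.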

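Furthermore, A2C is relatively simple to implement compared with many other deep reinforcement learning algorithms. A single network with a policy head and a value head is often enough, and there are few hyperparameters, although results still depend on choices such as the learning rate, the discount factor, and the rollout length. This makes it accessible to both beginners and experienced practitioners in the field of AI.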

In conclusion, Advantage Actor-Critic (A2C) is a powerful reinforcement learning algorithm that combines the strengths of policy gradient and value-based methods. Its ability to update the policy and value function simultaneously, scalability, efficiency, and simplicity make it a popular choice for training agents to perform complex tasks in various environments.

1. Improved Training Efficiency: A2C pairs an actor-critic architecture with an advantage estimate, so each policy update is weighted by how much better an action turned out than the critic expected. This typically makes training more efficient than plain policy gradient methods that rely on raw returns.

2. Faster Convergence: Because the advantage function judges each action against the critic's expectation rather than by its raw return, the gradient estimates are less noisy. Lower-variance updates help the algorithm converge in fewer training steps.

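3. Better Exploration: The advantage function provides a measure of how good or bad an action is relative to the current policy's average, so actions that turn out better than expected become more likely even if the policy rarely chose them before. Exploration itself comes from sampling actions from a stochastic policy, and implementations commonly add an entropy bonus to keep the policy from becoming deterministic too early.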

4. Increased Stability: Subtracting the critic's value estimate as a baseline keeps the gradient signal centered around zero, which reduces the noisy, oversized policy updates that can destabilize learning. A2C does not explicitly limit the size of each update, so a suitable learning rate still matters.

5. Enhanced Performance: Taken together, more efficient training, faster convergence, better exploration, and greater stability generally translate into agents that reach higher rewards with less training.
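Exploration and stability, as discussed in the list above, are often handled in practice by adding an entropy term to the training objective. The sketch below shows one common way to combine the actor loss, the critic loss, and an entropy bonus. The coefficient values `value_coef=0.5` and `entropy_coef=0.01` and the example loss values are illustrative assumptions, not settings taken from this article.

```python
import numpy as np

def softmax(logits):
    """Convert action logits to probabilities (numerically stable)."""
    shifted = logits - logits.max(axis=-1, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=-1, keepdims=True)

def entropy(probs, eps=1e-12):
    """Entropy of each action distribution; higher means more exploratory."""
    return -np.sum(probs * np.log(probs + eps), axis=-1)

def total_loss(policy_loss, value_loss, probs, value_coef=0.5, entropy_coef=0.01):
    """Combined objective: actor loss + weighted critic loss - entropy bonus."""
    return policy_loss + value_coef * value_loss - entropy_coef * entropy(probs).mean()

# Two states: one where the policy is undecided, one where it is nearly deterministic.
logits = np.array([[0.0, 0.0], [5.0, 0.0]])
probs = softmax(logits)
ent = entropy(probs)

# Placeholder loss values, standing in for the actor and critic losses of a real batch.
loss = total_loss(policy_loss=0.2, value_loss=0.4, probs=probs)
```

Because the entropy term is subtracted, minimizing the total loss rewards the policy for staying uncertain where it has no strong evidence, which slows premature collapse onto a single action.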

1. Video Game AI: A2C algorithms are commonly used in video game AI to train agents to play games more efficiently and effectively, by learning from their actions and rewards in real-time.

2. Robotics: A2C algorithms can be applied in robotics to improve the performance of robotic systems, such as autonomous vehicles or industrial robots, by optimizing their decision-making processes based on feedback from the environment.

3. Natural Language Processing: A2C algorithms can be used in natural language processing tasks, such as text generation or sentiment analysis, to enhance the accuracy and speed of language-based AI models.

4. Financial Trading: A2C algorithms are utilized in financial trading to develop AI systems that can make faster and more informed decisions in buying and selling assets, based on market trends and historical data.

5. Healthcare: A2C algorithms can be applied in healthcare to assist in medical diagnosis, treatment planning, and patient monitoring, by analyzing large amounts of medical data and providing recommendations to healthcare professionals.
