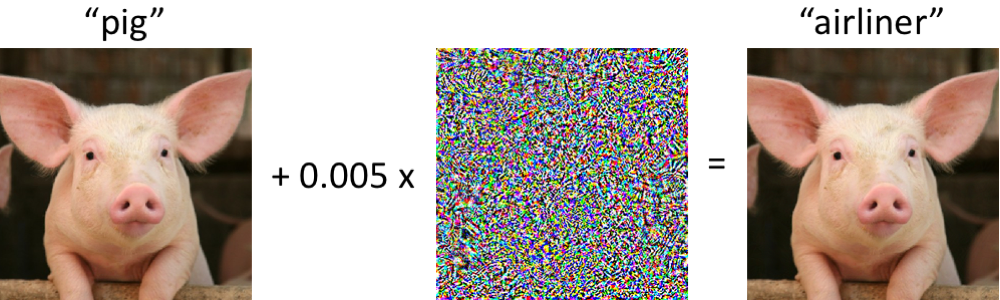

Adversarial examples are inputs to machine learning models that are intentionally designed to make the model produce incorrect predictions. They are usually crafted by applying small, often imperceptible perturbations to legitimate inputs, such as a carefully chosen noise-like pattern or slight pixel changes to an image, so that the model misclassifies the result. Adversarial examples are a significant concern in artificial intelligence (AI) because they can undermine the reliability and security of machine learning systems.
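To make the crafting step concrete, here is a minimal sketch of one well-known attack, the fast gradient sign method (FGSM). It is applied to a toy logistic-regression classifier rather than an image model. The weights `w`, bias `b`, input `x`, and step size `eps` are hypothetical values chosen for illustration. FGSM moves every feature by at most `eps` in the direction that increases the model's loss. For logistic regression with cross-entropy loss, that direction comes from the closed-form input gradient `(p - y) * w`.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def predict_proba(w: list[float], b: float, x: list[float]) -> float:
    """Probability of class 1 under a logistic-regression model."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def sign(v: float) -> int:
    return (v > 0) - (v < 0)


def fgsm(w: list[float], b: float, x: list[float], y: int, eps: float) -> list[float]:
    """Fast gradient sign method against logistic regression.

    For binary cross-entropy loss, the gradient of the loss with respect
    to the input x is (p - y) * w, where p is the predicted probability.
    Each feature moves by eps in the sign of that gradient, so no feature
    changes by more than eps (an L-infinity bound).
    """
    p = predict_proba(w, b, x)
    grad = [(p - y) * wi for wi in w]
    return [xi + eps * sign(gi) for xi, gi in zip(x, grad)]


# Hypothetical "trained" model and a correctly classified input of class 1.
w, b = [1.0, -2.0, 0.5, 3.0], 0.0
x, y = [0.2, 0.1, 0.4, 0.1], 1

x_adv = fgsm(w, b, x, y, eps=0.1)
print(predict_proba(w, b, x) > 0.5)      # True: original input is class 1
print(predict_proba(w, b, x_adv) > 0.5)  # False: perturbed input is misclassified
```

No feature changes by more than 0.1, yet the prediction flips. The reason is that the perturbation is aligned with the weights. The logit therefore drops by `eps * sum(|w_i|)` = 0.65, which is more than the original margin of 0.5. A commonly cited explanation for image models is the same effect at scale: many tiny per-pixel changes, each aligned with the model's gradient, add up to a large change in the output.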

One key characteristic of adversarial examples is that they can fool even state-of-the-art models that perform well on ordinary inputs. They can cause a model to misclassify an image of a cat as a dog, or a stop sign as a speed limit sign, with high confidence. This poses a serious threat to deploying AI systems in safety- and security-critical applications such as autonomous vehicles, medical diagnosis, and cybersecurity.

The existence of adversarial examples highlights a limitation of current machine learning algorithms: their predictions can change under small perturbations of the input. Researchers study adversarial examples to understand why they occur and how to defend against them. One approach, known as adversarial training, adds adversarial examples to the training data so that the model learns to classify them correctly and becomes more robust to such attacks. Another approach is adversarial detection, which tries to identify adversarial inputs before the model's prediction is acted upon.
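The idea of training with adversarial examples can be sketched in a few lines. The example below is a simplified variant, assumed here for illustration. It trains a toy logistic-regression model with stochastic gradient descent. At every step it replaces the training example with its FGSM perturbation against the current weights, so the model is always fitted on inputs shifted in the loss-increasing direction within an `eps` box. The function name `train`, the learning rate, epoch count, and two-point dataset are hypothetical. Practical defenses typically use neural networks and stronger, multi-step attacks rather than a linear model and a single FGSM step.

```python
import math
import random


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def predict_proba(w: list[float], b: float, x: list[float]) -> float:
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def fgsm(w: list[float], b: float, x: list[float], y: int, eps: float) -> list[float]:
    """One FGSM step against logistic regression (input gradient is (p - y) * w)."""
    p = predict_proba(w, b, x)
    grad = ((p - y) * wi for wi in w)
    return [xi + eps * ((g > 0) - (g < 0)) for xi, g in zip(x, grad)]


def train(data, eps: float = 0.0, lr: float = 0.1, epochs: int = 50, seed: int = 0):
    """Logistic regression trained with SGD on binary cross-entropy.

    With eps > 0 this becomes a simple form of adversarial training:
    each example is replaced by its FGSM perturbation against the
    current model before the gradient step.
    """
    rng = random.Random(seed)
    w, b = [0.0] * len(data[0][0]), 0.0
    examples = list(data)
    for _ in range(epochs):
        rng.shuffle(examples)
        for x, y in examples:
            if eps > 0:
                x = fgsm(w, b, x, y, eps)  # attack the current model
            p = predict_proba(w, b, x)
            # Gradient of the loss: (p - y) * x for w, (p - y) for b.
            w = [wi - lr * (p - y) * xi for wi, xi in zip(w, x)]
            b -= lr * (p - y)
    return w, b


# Hypothetical toy dataset: one feature, two classes.
data = [([1.0], 1), ([-1.0], 0)]
w_adv, b_adv = train(data, eps=0.2)
```

Calling `train(data)` with the default `eps=0.0` gives ordinary logistic regression. The same function can therefore produce a standard model and an adversarially trained one for comparison on perturbed inputs.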

Adversarial examples have also raised ethical concerns in the AI community. The ability to manipulate machine learning models with imperceptible changes to the input data raises questions about the trustworthiness of AI systems and their potential impact on society. As AI systems become more integrated into everyday life, it is crucial to address the vulnerabilities posed by adversarial examples and ensure that these systems are secure and reliable.

In conclusion, adversarial examples are a challenging and important area of research in the field of artificial intelligence. By understanding the nature of these examples and developing strategies to defend against them, we can improve the robustness and trustworthiness of machine learning systems in a wide range of applications.

1. Adversarial examples are crucial in AI as they highlight vulnerabilities in machine learning models, helping researchers improve the robustness and security of AI systems.

2. Understanding adversarial examples is essential for developing AI algorithms that can accurately classify and make decisions in the presence of noisy or misleading data.

3. By studying adversarial examples, AI practitioners can enhance the interpretability of machine learning models and ensure they are not easily fooled by malicious inputs.

4. Adversarial examples play a key role in advancing the field of adversarial machine learning, which focuses on defending AI systems against potential attacks and ensuring their reliability in real-world scenarios.

5. Overall, the study of adversarial examples is critical for the continued progress and adoption of AI technologies across various industries, as it helps build trust in the capabilities and performance of machine learning algorithms.

1. Adversarial examples can be used to test the robustness of AI models: small, deliberately chosen perturbations are added to the input data to check whether the model still classifies it correctly.

2. Adversarial examples can be used to improve the security of AI systems by exposing vulnerabilities and weaknesses in the model’s decision-making process.

3. Adversarial examples can be used to train AI models that are more resilient to attacks and adversarial inputs, leading to more reliable predictions when inputs have been manipulated.

4. Adversarial examples can be used in research to better understand how AI models make decisions and to develop strategies for mitigating the impact of adversarial attacks.

5. Adversarial examples can be used in the development of AI-based security systems to detect and prevent malicious attacks on sensitive data and systems.
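Robustness testing, the first use listed above, often amounts to measuring accuracy on attacked inputs at several perturbation budgets. The sketch below does this for a hypothetical linear model and a hypothetical four-point test set, again using FGSM as the attack. The names `robust_accuracy`, `w`, `b`, and `test_set` are illustrative. For a linear model, FGSM finds the worst-case perturbation within the `eps` box, so these numbers are exact for this toy case. For deep networks, a single-step attack like FGSM can overestimate robustness, and stronger iterative attacks are usually used instead.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def predict_proba(w, b, x):
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def fgsm(w, b, x, y, eps):
    """FGSM against logistic regression: step eps along sign((p - y) * w)."""
    p = predict_proba(w, b, x)
    grad = ((p - y) * wi for wi in w)
    return [xi + eps * ((g > 0) - (g < 0)) for xi, g in zip(x, grad)]


def robust_accuracy(w, b, dataset, eps):
    """Fraction of examples still classified correctly after an FGSM attack."""
    correct = 0
    for x, y in dataset:
        x_adv = fgsm(w, b, x, y, eps) if eps > 0 else x
        pred = 1 if predict_proba(w, b, x_adv) > 0.5 else 0
        correct += pred == y
    return correct / len(dataset)


# Hypothetical model and labelled test set.
w, b = [2.0, -1.0], 0.0
test_set = [([1.0, 0.0], 1), ([0.0, 1.0], 0), ([0.3, 0.3], 1), ([-0.5, 0.5], 0)]

for eps in (0.0, 0.2, 0.4):
    print(eps, robust_accuracy(w, b, test_set, eps))
# 0.0 1.0
# 0.2 0.75
# 0.4 0.5
```

Accuracy falls as the budget grows. Each attack lowers a point's margin by `eps * sum(|w_i|)`, which is `3 * eps` here, so points closest to the decision boundary flip first.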
