Adversarial examples in generative models refer to instances where a small, carefully crafted perturbation to the input data can lead to significant changes in the output generated by the model. This phenomenon has been widely studied in the field of artificial intelligence (AI) and has important implications for the security and robustness of generative models.
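One way to make this definition precise, using notation that is assumed here rather than taken from the text: let $G$ be the generative model, $x$ an input, $\delta$ the perturbation, $\epsilon$ a perturbation budget, and $d$ some measure of distance between outputs. An adversarial perturbation is then one that stays within the budget while pushing the output as far as possible from the original:

$$
\delta^{*} = \arg\max_{\|\delta\|_{p} \le \epsilon} \; d\big(G(x + \delta),\, G(x)\big)
$$

The choice of norm $p$ and distance $d$ depends on the data type; an $\ell_\infty$ bound on pixel values is a common choice for images.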

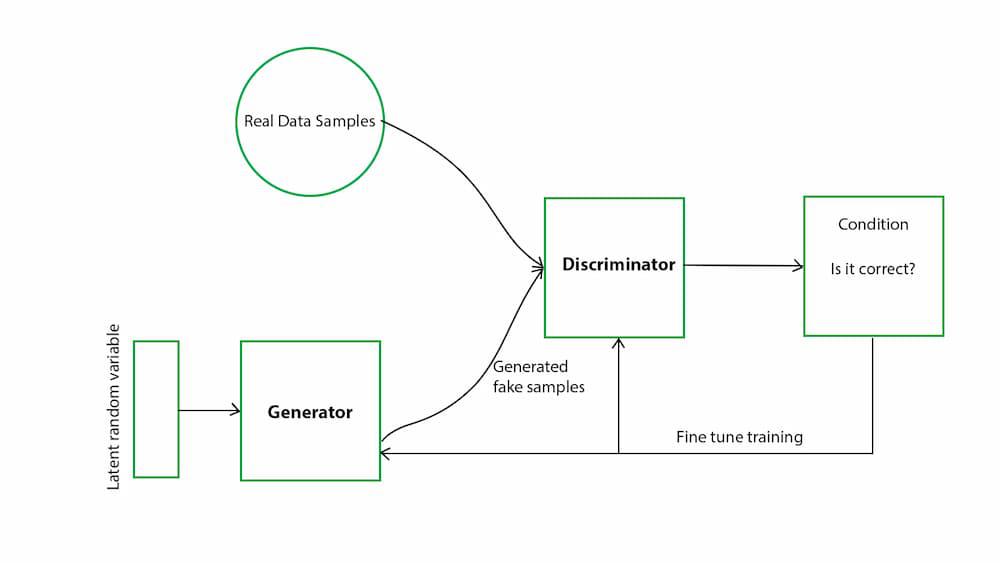

Generative models are a class of AI algorithms that learn to generate new data samples that are similar to a given dataset. These models are commonly used in tasks such as image generation, text generation, and music composition. Adversarial examples in generative models pose a unique challenge because they can lead to unexpected and potentially harmful outputs that deviate significantly from the intended distribution of the data.

A key characteristic of adversarial examples in generative models is that they cause the model to produce outputs that differ visually or semantically from what the unperturbed input would have produced. For example, in the context of image generation, a small perturbation to an input image of a cat could lead the model to generate an output that appears to be a completely different object, such as a dog or a bird. This can have serious consequences in applications where generated outputs feed into decision-making, such as autonomous vehicles or medical imaging.

The existence of adversarial examples shows that generative models can be manipulated through their inputs. Attacks fall into two main types: targeted attacks, where the adversary aims to make the model produce a specific output, and untargeted attacks, where the goal is any output that differs from what the model would produce for the unperturbed input. Attacks also differ in how much the adversary knows. In a white-box setting, where the model’s parameters are available, adversarial examples are typically found with gradient-based optimization. In a black-box setting, where the adversary has limited or no access to the model’s parameters, gradient-free methods such as evolutionary algorithms can be used instead.
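A targeted, gradient-based attack can be sketched on a toy model. Everything in the block below is an illustrative assumption rather than a method taken from this article: the "generator" is a hypothetical 2x2 linear map followed by `tanh`, the gradient is written out by hand, and the names `generate`, `loss`, and `targeted_attack` are made up for the example. The attack takes repeated signed-gradient steps toward a chosen target output and projects the result back into a small perturbation budget `epsilon`.

```python
import math

# Hypothetical toy "generator": a fixed 2x2 linear map followed by tanh.
# It stands in for a real generative model purely for illustration.
W = [[1.5, -2.0],
     [0.5, 1.0]]


def generate(x):
    """Map an input vector x to the output vector tanh(W x)."""
    return [math.tanh(sum(w * xi for w, xi in zip(row, x))) for row in W]


def loss(x, target):
    """Squared distance between the generated output and the attacker's target."""
    return sum((o - t) ** 2 for o, t in zip(generate(x), target))


def loss_gradient(x, target):
    """Analytic gradient of the loss with respect to the input x."""
    out = generate(x)
    # Derivative w.r.t. each pre-activation: 2 * (out - t) * (1 - out^2).
    upstream = [2.0 * (o - t) * (1.0 - o * o) for o, t in zip(out, target)]
    # Chain rule through the linear map: grad[j] = sum_i upstream[i] * W[i][j].
    return [sum(upstream[i] * W[i][j] for i in range(len(W)))
            for j in range(len(x))]


def sign(value):
    """Return -1, 0, or 1 according to the sign of value."""
    return (value > 0) - (value < 0)


def targeted_attack(x, target, epsilon=0.1, steps=5):
    """Take signed-gradient steps toward the target while staying within
    an L-infinity ball of radius epsilon around the original input."""
    step_size = epsilon / steps
    x_adv = list(x)
    for _ in range(steps):
        grad = loss_gradient(x_adv, target)
        # Step against the gradient to reduce the distance to the target.
        x_adv = [xa - step_size * sign(g) for xa, g in zip(x_adv, grad)]
        # Project back into the allowed perturbation budget.
        x_adv = [min(max(xa, xi - epsilon), xi + epsilon)
                 for xa, xi in zip(x_adv, x)]
    return x_adv


x = [0.2, -0.1]          # original input (illustrative values)
target = [0.0, 0.5]      # output the attacker wants the model to produce
x_adv = targeted_attack(x, target)
print("loss before:", loss(x, target))
print("loss after: ", loss(x_adv, target))
```

An untargeted variant would instead step in the direction that increases the distance from the original output. With a real model the gradient would normally come from an automatic differentiation library rather than a hand-written formula.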

Research on adversarial examples has produced several defense mechanisms that improve the robustness of generative models. One common approach is adversarial training, in which adversarial examples are included in the training data so that the model learns to produce stable outputs under small input perturbations. Other defenses include input preprocessing, such as denoising or smoothing the input before it reaches the model, and model regularization, such as adding noise to the model’s parameters.
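The input-smoothing idea can be illustrated with a very small example. The sketch below is an assumption-laden toy, not a recommended defense: it uses a hypothetical 1-D input, a hand-picked alternating perturbation, and a plain moving average (the helper names `moving_average` and `max_deviation` are made up here). It only shows that a high-frequency perturbation shrinks once the input is smoothed.

```python
def moving_average(signal, window=3):
    """Smooth a 1-D signal with a centered moving average.
    Windows are truncated at the edges rather than padded."""
    half = window // 2
    smoothed = []
    for i in range(len(signal)):
        lo = max(0, i - half)
        hi = min(len(signal), i + half + 1)
        smoothed.append(sum(signal[lo:hi]) / (hi - lo))
    return smoothed


def max_deviation(a, b):
    """Largest absolute element-wise difference between two signals."""
    return max(abs(x - y) for x, y in zip(a, b))


# Hypothetical clean input and an alternating perturbation of size epsilon.
clean = [0.0, 0.1, 0.2, 0.3, 0.4, 0.5]
epsilon = 0.05
perturbed = [v + epsilon * (1 if i % 2 == 0 else -1)
             for i, v in enumerate(clean)]

# Compare how far the perturbed input is from the clean one,
# before and after both pass through the same smoothing step.
before = max_deviation(perturbed, clean)
after = max_deviation(moving_average(perturbed), moving_average(clean))
print("deviation before smoothing:", before)
print("deviation after smoothing: ", after)
```

Smoothing also changes clean inputs, and an attacker who knows about the preprocessing step can craft perturbations that survive it, so a filter like this is at most one layer of a defense.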

In conclusion, adversarial examples in generative models are a critical issue in the field of AI that poses significant challenges to the security and robustness of these models. Understanding the underlying mechanisms of adversarial attacks and developing effective defense mechanisms are essential for ensuring the reliability and trustworthiness of generative models in real-world applications. Further research in this area is needed to address the evolving threats posed by adversarial examples and to advance the field of AI towards more secure and resilient generative models.

Beyond the risks they pose, adversarial examples are also useful to researchers and practitioners:

1. Adversarial examples in generative models can help researchers better understand the vulnerabilities and limitations of AI systems.

2. They can be used to evaluate the robustness and generalization capabilities of generative models.

3. Adversarial examples can be used to improve the performance of generative models by training them to be more resilient to adversarial attacks.

4. They can also be used to enhance the security of AI systems by identifying and mitigating potential vulnerabilities.

5. Adversarial examples in generative models can be used to generate synthetic data for training and testing purposes.

6. They can be used to study the impact of adversarial attacks on the performance of generative models in real-world scenarios.

7. Adversarial examples can be used to improve the interpretability and explainability of generative models by identifying the features that are most vulnerable to adversarial attacks.

Common applications include:

1. Image generation: Adversarial examples can be used in generative models to create realistic images that are visually similar to the original input but contain imperceptible changes that can fool the model.

2. Data augmentation: Adversarial examples can be used to generate additional training data for generative models, improving their performance and robustness.

3. Defense mechanisms: Adversarial examples can be used to test the robustness of generative models and develop defense mechanisms to protect against adversarial attacks.

4. Transfer learning: Adversarial examples crafted against one model often remain effective against another, so they can be used to probe how vulnerabilities carry over when a generative model is reused or fine-tuned.

5. Anomaly detection: Adversarial examples can be used to detect anomalies in generative models, helping to identify potential security threats or errors in the model’s output.
