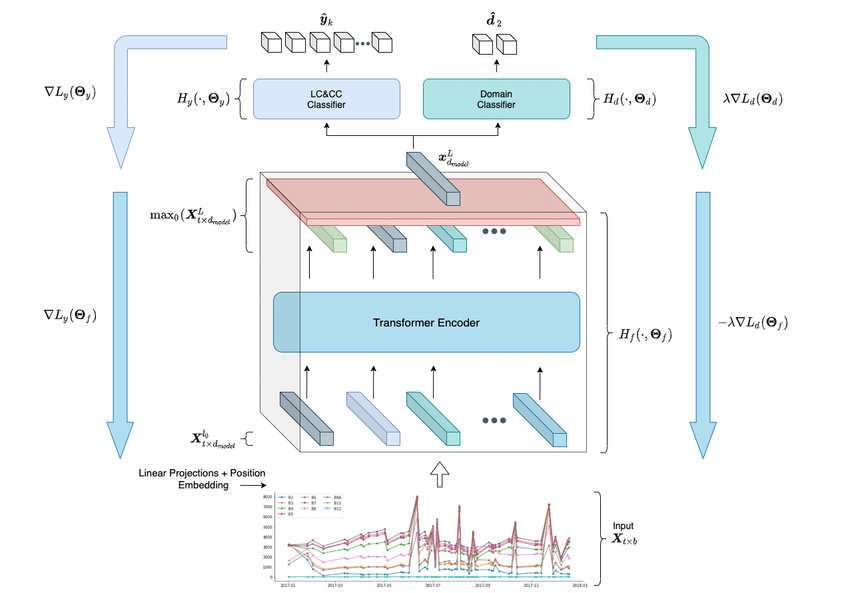

Adversarial training with transformers is a technique used in the field of artificial intelligence (AI) to improve the robustness and generalization of transformer models. Transformers are a type of neural network architecture that has been widely used in natural language processing (NLP) tasks such as machine translation, text generation, and sentiment analysis. However, like all machine learning models, transformers are susceptible to adversarial attacks, where small, carefully crafted perturbations to the input data can cause the model to make incorrect predictions.

Adversarial training defends against these attacks by training the model on adversarially perturbed examples in addition to the original training data. This makes the model more robust to the kinds of perturbations it has seen and, in some settings, can also improve generalization. Applying the technique to transformers matters because these models perform strongly across a wide range of NLP tasks yet remain vulnerable to adversarial inputs.

The basic idea behind adversarial training with transformers is to generate adversarial examples by perturbing the input data in a way that maximizes the model’s loss function. These adversarial examples are then used to train the transformer model alongside the original training data. By exposing the model to these adversarially perturbed examples during training, the model learns to be more robust to similar attacks at test time.
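The loop described above (perturb the input to increase the loss, then train on both versions) can be sketched in a few lines. This is a minimal illustration only: a tiny logistic classifier over a fixed-size vector stands in for a transformer operating on token embeddings, the perturbation is a single signed-gradient step in the style of the fast gradient sign method, and all names (`loss_and_grads`, `fgsm_perturb`, `adversarial_train_step`, `epsilon`, `lr`) are hypothetical choices made for this example.

```python
import math


def sign(v):
    """Return -1, 0, or 1 depending on the sign of v."""
    return (v > 0) - (v < 0)


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def loss_and_grads(w, b, x, y):
    """Binary cross-entropy of a logistic classifier, with gradients
    with respect to the weights, the bias, and the input x."""
    p = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)
    p = min(max(p, 1e-12), 1.0 - 1e-12)  # avoid log(0)
    loss = -(y * math.log(p) + (1 - y) * math.log(1.0 - p))
    err = p - y  # derivative of the loss with respect to the logit
    grad_w = [err * xi for xi in x]
    grad_x = [err * wi for wi in w]
    return loss, grad_w, err, grad_x


def fgsm_perturb(w, b, x, y, epsilon):
    """Move each input coordinate by epsilon in the direction that
    increases the loss (one signed-gradient step)."""
    _, _, _, grad_x = loss_and_grads(w, b, x, y)
    return [xi + epsilon * sign(g) for xi, g in zip(x, grad_x)]


def adversarial_train_step(w, b, x, y, epsilon=0.1, lr=0.1):
    """One gradient step on the average of the clean loss and the
    loss on the adversarially perturbed input."""
    x_adv = fgsm_perturb(w, b, x, y, epsilon)
    _, gw_clean, gb_clean, _ = loss_and_grads(w, b, x, y)
    _, gw_adv, gb_adv, _ = loss_and_grads(w, b, x_adv, y)
    new_w = [wi - lr * 0.5 * (gc + ga)
             for wi, gc, ga in zip(w, gw_clean, gw_adv)]
    new_b = b - lr * 0.5 * (gb_clean + gb_adv)
    return new_w, new_b


if __name__ == "__main__":
    w, b = [0.5, -1.0, 0.25], 0.1   # toy parameters (assumed)
    x, y = [1.0, 0.2, -0.3], 1      # toy example and label (assumed)
    x_adv = fgsm_perturb(w, b, x, y, epsilon=0.1)
    print("clean loss:", loss_and_grads(w, b, x, y)[0])
    print("adversarial loss:", loss_and_grads(w, b, x_adv, y)[0])
    w, b = adversarial_train_step(w, b, x, y)
    print("clean loss after one step:", loss_and_grads(w, b, x, y)[0])
```

With a real transformer, the same pattern would typically be applied to the embedding vectors rather than to raw text, since token IDs are discrete and cannot be nudged by a small continuous step.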

There are several different approaches to adversarial training with transformers, including using different types of perturbations (e.g., adding noise, changing individual tokens, or modifying the structure of the input) and different training strategies (e.g., fine-tuning the model on adversarial examples or training it from scratch). Researchers have also explored the use of adversarial training with transformers in combination with other techniques, such as data augmentation, regularization, and ensemble methods, to further improve the model’s robustness and generalization performance.
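As an illustration of the token-level perturbations mentioned above, the following sketch greedily swaps individual tokens to lower the model's confidence in the correct label. Everything here is assumed for the example: `toy_positive_score` is a hand-written stand-in for a sentiment model, `SUBSTITUTES` is a made-up substitution table, and `greedy_token_attack` is a hypothetical helper rather than the API of any particular library.

```python
import math


def toy_positive_score(tokens):
    """Hypothetical stand-in for a sentiment model: returns P(positive)."""
    weights = {"great": 2.0, "good": 1.0, "fine": 0.3,
               "okay": 0.2, "bad": -1.5, "awful": -2.5}
    z = sum(weights.get(t, 0.0) for t in tokens)
    return 1.0 / (1.0 + math.exp(-z))


# Made-up table of allowed replacements for each token.
SUBSTITUTES = {"great": ["good", "fine"], "good": ["fine", "okay"]}


def label_prob(tokens, true_label, score_fn):
    """Probability the model assigns to the true label (1 = positive)."""
    p = score_fn(tokens)
    return p if true_label == 1 else 1.0 - p


def greedy_token_attack(tokens, true_label, score_fn, substitutes,
                        max_swaps=1):
    """Perform up to max_swaps single-token substitutions, each time
    choosing the swap that most reduces the true-label probability."""
    current = list(tokens)
    for _ in range(max_swaps):
        best_tokens = None
        best_prob = label_prob(current, true_label, score_fn)
        for i, tok in enumerate(current):
            for cand in substitutes.get(tok, []):
                trial = current[:i] + [cand] + current[i + 1:]
                prob = label_prob(trial, true_label, score_fn)
                if prob < best_prob:
                    best_tokens, best_prob = trial, prob
        if best_tokens is None:
            break  # no substitution lowers the probability further
        current = best_tokens
    return current


if __name__ == "__main__":
    sentence = ["the", "movie", "was", "great"]
    adversarial = greedy_token_attack(
        sentence, 1, toy_positive_score, SUBSTITUTES)
    # The perturbed sentence keeps the original label and is added
    # to the training data alongside the clean example.
    training_data = [(sentence, 1), (adversarial, 1)]
    print(training_data)
```

In practice the substitution table would be restricted to replacements that preserve the meaning of the sentence, so that the original label still applies to the perturbed example.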

One of the key challenges in adversarial training with transformers is balancing robustness to adversarial attacks against performance on clean, non-adversarial data. Weighting adversarial examples too heavily can cause the model to overfit to the specific perturbations used during training and lose accuracy on the original task, while using too few may not improve robustness in any meaningful way. Researchers are actively exploring ways to address this trade-off and develop more effective adversarial training techniques for transformers.
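One common way to write this trade-off down is as a weighted objective. The notation below is an assumption introduced for illustration and does not come from a specific paper: $f_\theta$ is the model with parameters $\theta$, $\mathcal{L}$ is the training loss, $\delta$ is a perturbation bounded by $\epsilon$, and $\lambda \in [0, 1]$ is a mixing weight.

$$
\min_{\theta} \; \mathbb{E}_{(x, y) \sim \mathcal{D}} \Big[ (1 - \lambda)\, \mathcal{L}\big(f_\theta(x), y\big) + \lambda \max_{\|\delta\| \le \epsilon} \mathcal{L}\big(f_\theta(x + \delta), y\big) \Big]
$$

Setting $\lambda = 0$ recovers ordinary training on clean data, while $\lambda = 1$ trains only on worst-case perturbed inputs. Intermediate values, together with the perturbation budget $\epsilon$, control how much clean accuracy is traded for robustness.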

Overall, adversarial training with transformers is an important area of research in AI that aims to enhance the security and reliability of transformer models in real-world applications. By incorporating adversarial examples into the training process, researchers can help ensure that transformer models are more resilient to attacks and better able to generalize to new, unseen data. This can ultimately lead to more trustworthy and dependable AI systems that can be deployed in a wide range of practical settings.

The main benefits attributed to adversarial training with transformers are:

1. Improved robustness: Exposing a model to adversarial examples during training makes it more resistant to the kinds of attacks those examples represent.

2. Enhanced performance: Because the model cannot rely on brittle, input-specific cues, it is pushed to learn more generalizable features, which can improve task performance in some settings.

3. Better generalization: A model that has learned to handle a variety of perturbations may generalize better to unseen data.

4. Increased security: Organizations that train their models on adversarial examples reduce the risk that deployed systems can be manipulated through crafted inputs.

5. Mitigation of bias: Exposure to diverse and challenging examples may help reduce a model's reliance on spurious correlations, which can contribute to fairer decisions, although adversarial training alone does not guarantee this.

6. Advancement in AI research: Work on adversarial training improves the understanding of what transformer models learn and where they fail.

Application areas include:

1. Natural language processing: Adversarial training can improve the robustness of language models in tasks such as text generation, machine translation, and sentiment analysis.

2. Computer vision: Applied to transformer-based image recognition models, adversarial training can improve robustness against adversarial attacks.

3. Speech recognition: Adversarial training can make transformer-based speech recognition systems more resilient to adversarial perturbations of the audio input.

4. Anomaly detection: Adversarial training can make models that distinguish normal from abnormal patterns harder to evade with slightly modified inputs.

5. Cybersecurity: Adversarial training can enhance the security of AI systems by making them more resistant to adversarial attacks and malicious inputs.
