Adversarial attacks on object detection models are deliberate manipulations of input data designed to deceive or mislead an object detection model. Object detection models are artificial intelligence (AI) systems trained to identify objects within an image or video and locate each one, typically by predicting a class label and a bounding box. They are used in applications such as autonomous vehicles, surveillance systems, and medical imaging.

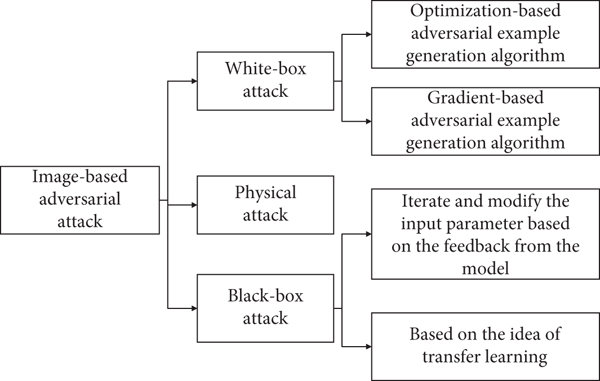

Adversarial attacks on object detection models take many forms, but most involve small, often imperceptible changes to the input that cause the model to misclassify objects or fail to detect them. These changes are usually computed by an optimization procedure that exploits weaknesses in the trained model, for example by following the gradient of the model's output with respect to the input pixels when the attacker has access to the model's parameters (a "white-box" setting).
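
To make the idea of a gradient-guided, imperceptible change concrete, here is a minimal sketch of the Fast Gradient Sign Method (FGSM), one well-known way to compute such a perturbation. It assumes a hypothetical "detector" reduced to a single linear objectness score over a six-pixel image, with made-up weights; real detectors are deep networks, but the mechanics are the same: compute the gradient of the score with respect to the pixels, then move every pixel by at most `eps` in the direction that lowers the score.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def sign(v: float) -> int:
    return (v > 0) - (v < 0)


def objectness(x, w, b):
    """Hypothetical toy detector head: probability that patch x contains an object."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def fgsm_hide(x, w, b, eps):
    """One-step FGSM that pushes the objectness score down.

    For s = sigmoid(w.x + b), ds/dx_i = s * (1 - s) * w_i.
    Each pixel moves by at most eps against the gradient (an L-infinity budget),
    then is clipped back to the valid [0, 1] pixel range.
    """
    s = objectness(x, w, b)
    grad = [s * (1.0 - s) * wi for wi in w]
    x_adv = [xi - eps * sign(g) for xi, g in zip(x, grad)]
    return [min(1.0, max(0.0, xi)) for xi in x_adv]


# Usage with made-up weights and a 6-pixel "image" (all values are illustrative)
w = [0.8, -0.5, 1.2, 0.3, -0.9, 0.6]
b = -0.2
x = [0.6, 0.2, 0.7, 0.5, 0.1, 0.4]
x_adv = fgsm_hide(x, w, b, eps=0.1)
print(f"clean score:       {objectness(x, w, b):.3f}")
print(f"adversarial score: {objectness(x_adv, w, b):.3f}")
print(f"max pixel change:  {max(abs(a - c) for a, c in zip(x_adv, x)):.3f}")
```

The `eps` bound is what keeps the change small: no pixel moves by more than 0.1, yet the score drops because every pixel moves in the harmful direction at once.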

One common type of attack adds carefully crafted noise, or perturbations, to the input image; a well-chosen perturbation can make the model misclassify objects or miss them altogether. Other attacks target specific stages of the detector's decision-making, such as its region proposals, per-box confidence scores, or bounding-box regression, for instance to suppress every detection in the image or to make the model report objects that are not there.
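
An attack that targets the detector's decision-making directly can be sketched as an iterative, projected gradient descent (PGD-style) loop that lowers the confidence score of every candidate box until none clears the detection threshold (sometimes called a "vanishing" or hiding attack). The sketch below is an illustration under strong assumptions: the detector is a hypothetical set of linear scoring heads, one per candidate box, over a four-pixel image, and all weights are invented. The `eps` ball keeps the total change per pixel bounded, while `alpha` is the per-step size.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def sign(v):
    return (v > 0) - (v < 0)


def region_scores(x, heads):
    """Objectness score of each candidate box; heads is a list of (w, b) pairs.

    This linear 'detector' is a hypothetical stand-in for a real detection head.
    """
    return [sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b) for w, b in heads]


def detections(x, heads, thresh=0.5):
    """Indices of candidate boxes whose score clears the confidence threshold."""
    return [k for k, s in enumerate(region_scores(x, heads)) if s >= thresh]


def pgd_vanish(x0, heads, eps, alpha, steps):
    """Iterative attack that lowers every box's objectness score.

    Loss = sum of scores. Each step moves alpha against the sign of the gradient,
    then projects back into the L-infinity ball of radius eps around x0
    and into the valid [0, 1] pixel range.
    """
    x = list(x0)
    for _ in range(steps):
        grad = [0.0] * len(x)
        for s, (w, _) in zip(region_scores(x, heads), heads):
            for i, wi in enumerate(w):
                grad[i] += s * (1.0 - s) * wi  # d(sum of scores)/dx_i
        x = [xi - alpha * sign(g) for xi, g in zip(x, grad)]
        x = [min(max(xi, x0i - eps), x0i + eps) for xi, x0i in zip(x, x0)]
        x = [min(max(xi, 0.0), 1.0) for xi in x]
    return x


# Two made-up candidate boxes, each looking at half of a 4-pixel image
heads = [([1.0, 1.0, 0.0, 0.0], -1.0), ([0.0, 0.0, 1.0, 1.0], -1.0)]
x0 = [0.8, 0.8, 0.7, 0.7]
x_adv = pgd_vanish(x0, heads, eps=0.35, alpha=0.1, steps=5)
print("clean detections:", detections(x0, heads))           # [0, 1]
print("adversarial detections:", detections(x_adv, heads))  # []
```

Unlike the single FGSM step, the iterative version re-evaluates the gradient after each move, which generally finds stronger perturbations for the same budget on nonlinear models.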

Adversarial attacks on object detection models pose a significant threat to the reliability and security of AI systems. In an autonomous vehicle, for example, a successful attack could cause the perception system to miss a pedestrian or a stop sign, creating a dangerous situation on the road. In a surveillance system, an attacker could manipulate the input so that the model fails to detect a particular person or object, and thereby evade detection.

Researchers and practitioners in AI are actively developing defenses against adversarial attacks on object detection models. One approach, known as adversarial training, generates adversarial examples against the model while it is being trained and adds them to the training data, alongside or in place of clean inputs. By learning from inputs crafted to expose its weaknesses, the model should become more robust to similar attacks.
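
A minimal sketch of the adversarial training loop follows. It makes several simplifying assumptions: the model is a hypothetical two-feature logistic classifier standing in for a detector's classification head, the six data points are made up, and each training example is replaced by its FGSM counterpart (one common simplification; many schemes mix clean and adversarial examples). The key structure is the inner "attack the current model" step nested inside the ordinary gradient-descent update.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def sign(v):
    return (v > 0) - (v < 0)


def predict(w, b, x):
    """Probability of class 1 under a toy logistic model (hypothetical stand-in)."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def fgsm(w, b, x, y, eps):
    """FGSM against the current model. For logistic loss, d(loss)/dx = (p - y) * w."""
    p = predict(w, b, x)
    return [xi + eps * sign((p - y) * wi) for xi, wi in zip(x, w)]


def adversarial_train(data, eps, lr=0.5, epochs=200):
    """Full-batch gradient descent on FGSM examples generated from the current weights."""
    w = [0.0] * len(data[0][0])
    b = 0.0
    for _ in range(epochs):
        gw = [0.0] * len(w)
        gb = 0.0
        for x, y in data:
            x_adv = fgsm(w, b, x, y, eps)  # inner step: attack the current model
            p = predict(w, b, x_adv)
            for i in range(len(w)):
                gw[i] += (p - y) * x_adv[i]  # gradient of logistic loss w.r.t. w
            gb += p - y
        n = len(data)
        w = [wi - lr * g / n for wi, g in zip(w, gw)]  # outer step: update the model
        b -= lr * gb / n
    return w, b


def accuracy(w, b, data, eps=0.0):
    """Accuracy on clean inputs (eps=0) or on FGSM-perturbed inputs (eps>0)."""
    correct = 0
    for x, y in data:
        x_eval = fgsm(w, b, x, y, eps) if eps > 0 else x
        correct += int((predict(w, b, x_eval) >= 0.5) == (y == 1))
    return correct / len(data)


# Made-up, linearly separable toy data
data = [
    ([2.0, 2.0], 1), ([2.5, 1.5], 1), ([1.5, 2.5], 1),
    ([-2.0, -2.0], 0), ([-2.5, -1.5], 0), ([-1.5, -2.5], 0),
]
w, b = adversarial_train(data, eps=0.5)
print("clean accuracy:", accuracy(w, b, data))
print("accuracy under FGSM (eps=0.5):", accuracy(w, b, data, eps=0.5))
```

Because the adversarial examples are regenerated from the current weights at every epoch, the model is always trained against attacks on its present self rather than on a fixed, stale set of perturbed images.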

Another approach is to build detection mechanisms that identify when an input image has probably been manipulated to deceive the model. Such mechanisms can then filter out suspicious inputs before the model processes them.
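
The text does not name a specific detection mechanism, so the sketch below swaps in one published idea, feature squeezing: reduce the image's bit depth and flag the input if the model's score changes a lot, on the reasoning that small adversarial perturbations are often erased by squeezing while the content of a clean image is not. Everything here is an assumption for illustration: a hypothetical linear objectness head, a six-pixel binary "clean" image (so squeezing leaves it exactly unchanged), and an arbitrary threshold of 0.1. In practice the threshold would be tuned on clean data, where squeezing also shifts scores somewhat.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def score(x, w, b):
    """Hypothetical linear objectness head standing in for a real detector."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def squeeze_bits(x, bits):
    """Reduce each pixel in [0, 1] to 2**bits intensity levels (bit-depth reduction)."""
    levels = 2 ** bits - 1
    return [round(xi * levels) / levels for xi in x]


def looks_adversarial(x, w, b, bits=1, threshold=0.1):
    """Flag inputs whose score changes by more than threshold after squeezing."""
    return abs(score(x, w, b) - score(squeeze_bits(x, bits), w, b)) > threshold


w = [1.0, -1.0, 1.0, 1.0, -1.0, 1.0]
b = -2.0
clean = [1.0, 0.0, 1.0, 1.0, 0.0, 1.0]
# Move each pixel by 0.3 in the direction that lowers the score
perturbed = [xi - 0.3 if wi > 0 else xi + 0.3 for xi, wi in zip(clean, w)]
print("clean flagged:", looks_adversarial(clean, w, b))          # False
print("perturbed flagged:", looks_adversarial(perturbed, w, b))  # True
```

A filter like this sits in front of the model and only decides whether to pass or reject an input; it does not change the model itself, so it can be combined with adversarial training.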

Overall, adversarial attacks on object detection models remain a significant challenge for the field of AI. As AI systems become more deeply integrated into daily life, researchers and practitioners must continue to develop robust defenses against these attacks to keep such systems reliable and secure.

Despite the risks, adversarial attacks are also a useful research tool:

1. Adversarial attacks can help researchers understand the vulnerabilities of object detection models.

2. Adversarial attacks can be used to evaluate the robustness of object detection models.

3. Adversarial attacks can be used to improve the security of object detection models.

4. Adversarial attacks can be used to generate adversarial examples for testing and training object detection models.

5. Adversarial attacks can be used to study the generalization capabilities of object detection models.
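
Using attacks to evaluate robustness (item 2) usually means sweeping the attack budget and measuring how much detection performance survives. The sketch below is a hypothetical, heavily simplified version: a single linear objectness head, three made-up images that each contain one object, FGSM as the attack, and recall (the fraction of objects still detected) as the metric. A real evaluation would use a trained detector, a labeled dataset, and a standard metric such as mean average precision.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def sign(v):
    return (v > 0) - (v < 0)


def score(x, w, b):
    """Hypothetical one-box linear detector: objectness score of image x."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def fgsm_hide(x, w, b, eps):
    """One FGSM step that lowers the score, clipped to the [0, 1] pixel range."""
    s = score(x, w, b)
    return [min(1.0, max(0.0, xi - eps * sign(s * (1.0 - s) * wi))) for xi, wi in zip(x, w)]


def recall_under_attack(images, w, b, eps, thresh=0.5):
    """Fraction of images (each containing one object) still detected after the attack."""
    hits = sum(score(fgsm_hide(x, w, b, eps), w, b) >= thresh for x in images)
    return hits / len(images)


# Made-up detector weights and images
w = [1.0, 1.0, -0.5, 0.5]
b = -1.0
images = [[0.9, 0.8, 0.1, 0.6], [0.7, 0.6, 0.2, 0.5], [0.6, 0.5, 0.3, 0.4]]
budgets = [0.0, 0.1, 0.2, 0.3, 0.4]
for eps in budgets:
    print(f"eps={eps:.1f}  recall={recall_under_attack(images, w, b, eps):.2f}")
```

Plotting recall against `eps` gives a robustness curve; comparing curves before and after a defense such as adversarial training is one way to quantify whether the defense helped.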

Related research directions include:

1. Adversarial image generation

2. Adversarial training of object detection models

3. Evaluation of robustness of object detection models against adversarial attacks

4. Development of defense mechanisms against adversarial attacks on object detection models
