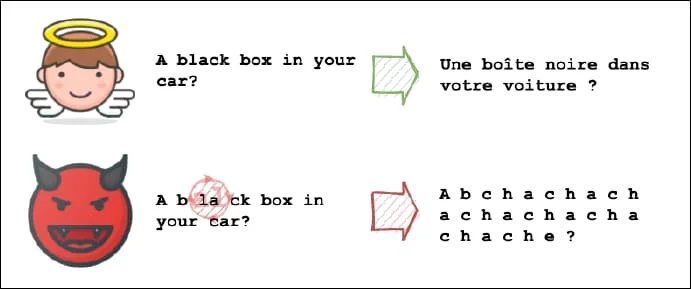

Adversarial examples in artificial intelligence, and specifically in natural language processing (NLP), are inputs intentionally designed to deceive machine learning models into making incorrect predictions or classifications. They exploit vulnerabilities in a model’s decision-making process, often through small changes to the input that a human would consider insignificant but that cause large changes in the model’s output.
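One common way to make this definition precise is shown below. The notation is introduced here for illustration and does not come from the original text: $f$ is the classifier, $x$ is an input with true label $y$ that $f$ already classifies correctly, $d$ is a distance between inputs (for text, often the number of words changed), and $\epsilon$ is a perturbation budget.

$$
\text{given } f(x) = y, \quad \text{find } x' \text{ such that } d(x, x') \le \epsilon \quad \text{and} \quad f(x') \neq y.
$$

For text, attacks usually add a further constraint that $x'$ keep the meaning of $x$ for a human reader, because a small distance alone does not guarantee that.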

The concept of adversarial examples was first introduced in the field of computer vision, where researchers discovered that adding imperceptible noise to an image could cause a state-of-the-art image classification model to misclassify the image. Since then, adversarial examples have been studied in various domains of AI, including NLP.

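In NLP, adversarial examples can take many forms, such as adding or removing words, changing the order of words, or replacing words with synonyms. Because text is made of discrete words, such edits are rarely invisible; the goal is instead to keep the meaning unchanged for a human reader while still fooling the model. For example, replacing “great” with “terrific” in “The movie was great” leaves the sentiment obvious to a person, yet a vulnerable sentiment classifier might flip its prediction from positive to negative. By contrast, turning “The cat sat on the mat” into “The cat sat on the rat” changes what the sentence says, so a different prediction there reflects a genuinely different input rather than a weakness of the model.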
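The synonym-replacement strategy described above can be sketched as a greedy search: walk through the sentence, swap each word for the synonym that pushes the model furthest away from its original prediction, and stop as soon as the label flips. This is a minimal illustration under stated assumptions, not a published attack: the bag-of-words scorer `WEIGHTS`, the synonym table `SYNONYMS`, and the helper names are all hypothetical stand-ins for a trained model and a real synonym source.

```python
from __future__ import annotations

# Hypothetical toy sentiment "model": a bag-of-words linear scorer standing in
# for a trained neural classifier. The negative weight on "terrific" mimics a
# spurious pattern a real model might have learned.
WEIGHTS = {"great": 2.0, "good": 1.0, "terrific": -0.5, "bad": -2.0, "boring": -1.5}

# Hypothetical synonym table; real attacks often draw candidates from a
# thesaurus or from nearest neighbours in a word-embedding space.
SYNONYMS = {"great": ["terrific", "good"], "movie": ["film"]}


def score(tokens: list[str]) -> float:
    """Sum of word weights; a positive total means 'positive' sentiment."""
    return sum(WEIGHTS.get(t, 0.0) for t in tokens)


def predict(tokens: list[str]) -> str:
    return "positive" if score(tokens) > 0 else "negative"


def replace_at(tokens: list[str], i: int, word: str) -> list[str]:
    """Return a copy of tokens with position i replaced by word."""
    return tokens[:i] + [word] + tokens[i + 1:]


def synonym_attack(sentence: str) -> str | None:
    """Greedily swap words for synonyms until the predicted label flips.

    Returns the adversarial sentence, or None if no flip is found.
    """
    tokens = sentence.lower().split()
    original = predict(tokens)
    # Push the score down if the original label is positive, up otherwise.
    sign = 1.0 if original == "positive" else -1.0
    for i, word in enumerate(tokens):
        candidates = SYNONYMS.get(word, [])
        if not candidates:
            continue
        # Pick the synonym that moves the score furthest from the original label.
        best = min(candidates, key=lambda c: sign * score(replace_at(tokens, i, c)))
        # Keep the swap only if it actually helps the attack.
        if sign * score(replace_at(tokens, i, best)) < sign * score(tokens):
            tokens = replace_at(tokens, i, best)
        if predict(tokens) != original:
            return " ".join(tokens)
    return None


print(synonym_attack("The movie was great"))  # → the movie was terrific
```

Published attacks add constraints this sketch omits, such as checking that each substitution preserves grammar and meaning, and ranking words by importance before trying to replace them.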

The existence of adversarial examples poses a significant challenge to the robustness and reliability of AI systems. If a model can be easily fooled by small changes to the input data, it may not be suitable for real-world applications where security and accuracy are critical. Adversarial examples also raise questions about the interpretability of AI models, as they highlight the limitations of current approaches to understanding and explaining the decisions made by these models.

Researchers have proposed various techniques to defend against adversarial examples in NLP, such as adversarial training, which involves training the model on a mixture of clean and adversarial examples to improve its robustness. Other approaches include using adversarial detection methods to identify and filter out adversarial inputs before they can affect the model’s predictions.
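The adversarial training idea described above can be illustrated in a deliberately simplified form: the training set is extended with perturbed copies of each example that keep the original label, and the model is trained on the mixture. Two assumptions are worth stating loudly. First, the perturbed copies come from a fixed, hypothetical synonym table rather than from attacking the current model during training, as stronger adversarial-training methods do. Second, the perceptron here is only a stand-in for a real NLP model.

```python
from collections import defaultdict

# Hypothetical synonym table used to generate perturbed training copies.
SYNONYMS = {"great": ["terrific"], "awful": ["dreadful"]}


def perturb(tokens):
    """Return one copy of tokens per single-word synonym swap."""
    variants = []
    for i, word in enumerate(tokens):
        for synonym in SYNONYMS.get(word, []):
            variants.append(tokens[:i] + [synonym] + tokens[i + 1:])
    return variants


def predict(weights, tokens):
    """Linear bag-of-words classifier: +1 (positive) or -1 (negative)."""
    return 1 if sum(weights.get(t, 0.0) for t in tokens) > 0 else -1


def train_perceptron(data, epochs=10):
    """Classic perceptron updates on (tokens, label) pairs with labels in {+1, -1}."""
    weights = defaultdict(float)
    for _ in range(epochs):
        for tokens, label in data:
            if predict(weights, tokens) != label:
                for t in tokens:
                    weights[t] += label
    return weights


def adversarial_training(clean, epochs=10):
    """Train on the clean examples plus perturbed copies that keep their labels."""
    augmented = list(clean)
    for tokens, label in clean:
        augmented.extend((variant, label) for variant in perturb(tokens))
    return train_perceptron(augmented, epochs)


clean = [("the food was great".split(), 1), ("the food was awful".split(), -1)]
plain = train_perceptron(clean)
robust = adversarial_training(clean)
attack = "the food was terrific".split()
print(predict(plain, attack), predict(robust, attack))  # → -1 1
```

The model trained only on clean data has never seen “terrific”, so that word contributes nothing and the sentence falls on the negative side of the decision boundary; the adversarially trained model has learned a positive weight for it and classifies the perturbed sentence correctly.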

Despite these efforts, adversarial examples remain an active area of AI research. As models grow more complex and are deployed in more applications, the opportunities and incentives for adversarial attacks grow as well. Addressing this challenge will require a combination of technical solutions, ethical considerations, and regulatory frameworks to ensure that AI systems are developed and deployed responsibly.

Studying adversarial examples benefits NLP research and practice in several ways:

1. Adversarial examples can help researchers better understand the vulnerabilities of machine learning models in NLP tasks.

2. They can be used to evaluate the robustness and generalization capabilities of NLP models.

3. Adversarial examples can be used to improve the security of NLP systems by identifying and addressing potential weaknesses.

4. They can also be used to make NLP models more resilient to adversarial attacks by including adversarial examples in training.

5. Adversarial examples can provide insights into the limitations of current NLP algorithms and guide the development of more robust and reliable models.
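Using adversarial examples to evaluate robustness (item 2 above) is often summarized by comparing accuracy on clean inputs with accuracy under attack. The sketch below follows one common convention, assumed here rather than taken from the original: only examples the model already classifies correctly are attacked, and the attack success rate is the fraction of those that the attack manages to flip. The classifier, the attack, and the four examples are hypothetical.

```python
from typing import Callable, Optional


def robustness_report(examples, classify: Callable[[str], str],
                      attack: Callable[[str, Callable[[str], str]], Optional[str]]):
    """Compare accuracy on clean inputs with accuracy under attack.

    Only examples the model already gets right are attacked; an attack
    "succeeds" when it returns an input the model now gets wrong.
    """
    n = len(examples)
    clean_correct = robust_correct = successes = 0
    for text, label in examples:
        if classify(text) != label:
            continue  # wrong even without an attack: lowers both accuracies
        clean_correct += 1
        adversarial = attack(text, classify)
        if adversarial is not None and classify(adversarial) != label:
            successes += 1
        else:
            robust_correct += 1
    return {
        "clean_accuracy": clean_correct / n,
        "robust_accuracy": robust_correct / n,
        "attack_success_rate": successes / clean_correct if clean_correct else 0.0,
    }


def toy_classifier(text):
    # Hypothetical keyword rule standing in for a trained model.
    return "positive" if "great" in text.split() else "negative"


def toy_attack(text, classify):
    # Hypothetical one-word synonym swap; returned only if it changes the prediction.
    candidate = " ".join("terrific" if t == "great" else t for t in text.split())
    return candidate if classify(candidate) != classify(text) else None


examples = [("a great film", "positive"), ("a dull film", "negative"),
            ("great acting", "positive"), ("not great", "negative")]
print(robustness_report(examples, toy_classifier, toy_attack))
```

On these four made-up examples the report gives a clean accuracy of 0.75, a robust accuracy of 0.25, and an attack success rate of 2/3. A large gap between the first two numbers is the kind of weakness the list above refers to.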

Adversarial examples have been studied across a wide range of NLP tasks, including:

1. Text classification

2. Sentiment analysis

3. Machine translation

4. Named entity recognition

5. Text generation

6. Question answering

7. Text summarization

8. Language modeling