Adversarial Logit Pairing (ALP) is a training technique, introduced by Kannan, Kurakin and Goodfellow in 2018, for improving the robustness of machine learning models, particularly neural network classifiers, against adversarial attacks. Adversarial attacks are deliberate manipulations of input data designed to fool a machine learning model into making incorrect predictions or classifications. Such attacks could have serious consequences, for example causing an autonomous vehicle to misread a road sign or causing a medical image to be misclassified.

The goal of ALP is to make a model more resilient to such attacks by encouraging it to learn more robust features. ALP builds on adversarial training: the model is trained to predict the correct class label not only for each clean input sample but also for adversarially perturbed versions of that sample, which keep the original label. Adversarial perturbations are small, carefully crafted changes to the input data that are designed to cause the model to make incorrect predictions.
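To make "small, carefully crafted changes" concrete, here is a minimal sketch of the Fast Gradient Sign Method (FGSM), one simple way to generate adversarial perturbations, applied to a hypothetical two-feature linear softmax classifier. FGSM is an illustrative choice, not part of ALP itself: ALP can be combined with different attacks, and stronger iterative attacks such as projected gradient descent (PGD) are more common in practice. The weights `W`, bias `b` and all function names are made up for this example. Each feature is moved by exactly `eps` in whichever direction increases the cross-entropy loss.

```python
import math

def softmax(z):
    # Subtract the largest logit before exponentiating for numerical stability.
    m = max(z)
    exps = [math.exp(v - m) for v in z]
    s = sum(exps)
    return [e / s for e in exps]

def logits(W, b, x):
    # Hypothetical linear model: z_k = sum_j W[k][j] * x[j] + b[k]
    return [sum(wkj * xj for wkj, xj in zip(wk, x)) + bk for wk, bk in zip(W, b)]

def cross_entropy(z, y):
    # Negative log-probability of the true class y.
    return -math.log(softmax(z)[y])

def input_gradient(W, b, x, y):
    # For a linear softmax model, dLoss/dx = W^T (p - onehot(y)).
    p = softmax(logits(W, b, x))
    delta = [pk - (1.0 if k == y else 0.0) for k, pk in enumerate(p)]
    return [sum(W[k][j] * delta[k] for k in range(len(W))) for j in range(len(x))]

def sign(v):
    # Returns 1, -1 or 0.
    return (v > 0) - (v < 0)

def fgsm(W, b, x, y, eps):
    # Step every feature by eps in the direction that increases the loss
    # (an L-infinity-bounded perturbation).
    g = input_gradient(W, b, x, y)
    return [xj + eps * sign(gj) for xj, gj in zip(x, g)]
```

With `W = [[1.0, -0.5], [-0.3, 0.8]]`, `b = [0.0, 0.0]`, `x = [0.6, 0.2]`, true class `0` and `eps = 0.1`, the perturbed input is approximately `[0.5, 0.3]`, and the model's loss on it is higher than on `x`, even though no feature moved by more than `0.1`.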

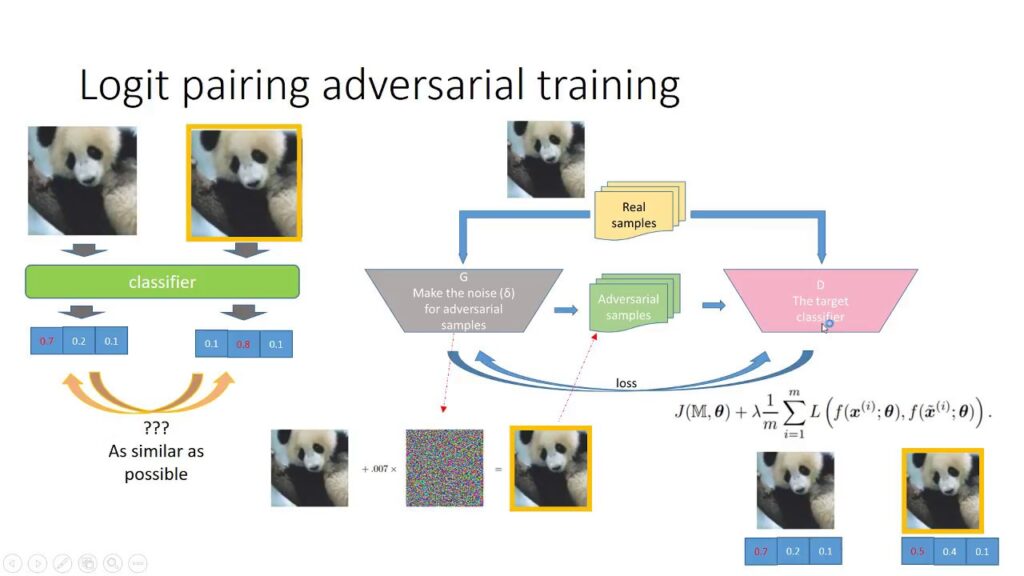

The key idea behind ALP is to pair the logits, the raw output values of the model before a softmax function converts them into probabilities. During training, ALP penalizes the difference between the logits of each clean input sample and the logits of its adversarial counterpart, pushing the model to produce similar outputs for both. The aim is not for the model to distinguish genuine inputs from adversarial ones, but the opposite: to treat a perturbed input as the same example as its clean original, so that a small perturbation is less able to change the prediction.
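One way to write the pairing in symbols is shown below. It assumes a classifier $f_\theta$ that maps an input $x$ to a vector of $K$ logits $z$, and writes $\tilde{x}$ for an adversarial counterpart of $x$; this notation is introduced here for illustration. The pairing penalty is an $L_2$ distance between the two logit vectors, as in the original ALP paper, though other distances are possible.

$$
z = f_\theta(x) \in \mathbb{R}^K, \qquad p_k(x) = \frac{\exp(z_k)}{\sum_{j=1}^{K} \exp(z_j)}
$$

$$
R_{\text{pair}}(x, \tilde{x}) = \big\lVert f_\theta(x) - f_\theta(\tilde{x}) \big\rVert_2^2
$$

Because the softmax is unchanged when the same constant is added to every logit, two inputs can receive identical probabilities while having different logits. Driving $R_{\text{pair}}$ to zero is therefore a stricter condition than matching predicted probabilities.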

One advantage of ALP is that it does not require any additional data or labels. The adversarial examples are generated from the existing training data and inherit the labels of the clean samples they came from, and the logit pairing enters training as a regularization term in the loss function. It is not free, however: like adversarial training in general, ALP must generate adversarial examples during training, usually with iterative attacks, which makes training considerably more expensive than standard training.
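Putting the pieces together, a minimal sketch of an ALP-style objective for one minibatch might look as follows. It assumes the logits have already been computed for the clean inputs and for their adversarial counterparts, averages the cross-entropy over the mixed clean-plus-adversarial batch, and adds the pairing penalty weighted by a coefficient `lam`. The function names and the default `lam = 0.5` are hypothetical, and published variants may weight the terms differently.

```python
import math

def log_softmax(z):
    # Numerically stable log-softmax: z_k - logsumexp(z).
    m = max(z)
    lse = m + math.log(sum(math.exp(v - m) for v in z))
    return [v - lse for v in z]

def cross_entropy(z, y):
    # Negative log-probability of the true class y, computed from raw logits z.
    return -log_softmax(z)[y]

def logit_pairing(z_clean, z_adv):
    # Squared L2 distance between the clean and adversarial logit vectors.
    return sum((a - b) ** 2 for a, b in zip(z_clean, z_adv))

def alp_loss(clean_logits, adv_logits, labels, lam=0.5):
    """Hypothetical ALP-style objective for one minibatch:
    mean cross-entropy over the 2n clean + adversarial examples
    + lam * mean squared L2 distance between paired logits.
    Adversarial examples reuse the labels of their clean originals."""
    n = len(labels)
    ce_sum = 0.0
    pair_sum = 0.0
    for zc, za, y in zip(clean_logits, adv_logits, labels):
        ce_sum += cross_entropy(zc, y) + cross_entropy(za, y)
        pair_sum += logit_pairing(zc, za)
    return ce_sum / (2 * n) + lam * pair_sum / n
```

In a real training loop, `clean_logits` and `adv_logits` would come from the model evaluated on a minibatch and on adversarial examples generated from it, and an automatic differentiation framework would backpropagate through both the cross-entropy and the pairing term. Setting `lam = 0` reduces the sketch to plain adversarial training on a mixed batch.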

ALP was originally evaluated on image classification benchmarks, including MNIST, SVHN and ImageNet, where it was reported to improve robustness against adversarial attacks; the same idea can in principle be applied to other domains such as natural language processing and speech recognition. By training the model to classify both original and adversarial images correctly while keeping their logits close, ALP aims to make the model's predictions stable under small input perturbations. Later independent evaluations using stronger attacks found ALP's robustness on ImageNet to be much lower than first reported, so claimed gains should be checked against strong attacks.

In summary, ALP is a technique for improving the robustness of machine learning models against adversarial attacks. By training the model to classify both original and adversarial examples correctly while pairing their logits, it aims to improve the security and reliability of AI systems and to increase their trustworthiness in real-world applications.

Key benefits of Adversarial Logit Pairing:

1. Enhances the robustness of machine learning models against adversarial attacks

2. Can improve how well AI systems generalize to perturbed inputs

3. Helps mitigate the effect of adversarial examples

4. Contributes to the development of more secure and reliable AI applications

5. Facilitates the advancement of adversarial defense techniques in the field of artificial intelligence

Typical use cases:

1. Adversarial training of neural networks

2. Improving the robustness of machine learning models

3. Training and evaluating models with generated adversarial examples

4. Enhancing the security of AI systems

5. Reducing the impact of adversarial attacks on AI models
