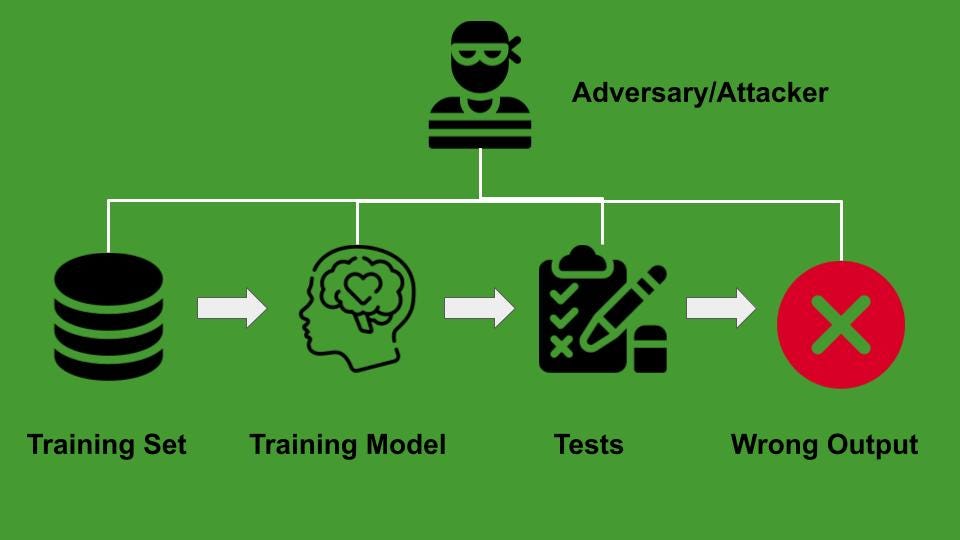

Adversarial testing is a technique used in the field of artificial intelligence (AI) to evaluate the robustness and security of machine learning models. It involves deliberately crafting malicious inputs, known as adversarial examples, to test how the model performs under attack and to identify vulnerabilities that attackers could exploit.

Adversarial examples are inputs that are intentionally designed to deceive a machine learning model into making incorrect predictions or classifications. These inputs are often generated by making small, frequently imperceptible changes to legitimate data points, such as images or text, so that the model misclassifies them. Adversarial examples are the core tool of evasion attacks. Adversarial testing more broadly also covers other types of attacks, including poisoning attacks and model inversion attacks.

Evasion attacks modify the input data at inference time, typically by adding an adversarial perturbation, so that the model produces an incorrect output. This type of attack is particularly concerning in security-critical applications, such as autonomous vehicles or medical diagnosis systems, where a misclassification could have serious consequences. Adversarial testing helps to identify vulnerabilities in the model’s decision-making process and to develop defenses against evasion attacks.
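As a rough illustration of an evasion attack, the sketch below applies one step of the fast gradient sign method (FGSM), one well-known way to craft adversarial examples, to a tiny logistic regression model. The weights, bias, input, and `epsilon` are made-up values chosen for illustration, and the function names are hypothetical. The perturbation here is larger than what would count as imperceptible on real data.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict_proba(weights, bias, x):
    """Probability of class 1 under a logistic regression model."""
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)


def fgsm_perturb(weights, bias, x, y_true, epsilon):
    """Shift each feature by +/- epsilon in the direction that raises the loss."""
    p = predict_proba(weights, bias, x)
    # For cross-entropy loss, the gradient with respect to the input is (p - y) * w.
    grad = [(p - y_true) * w for w in weights]
    signs = [(g > 0) - (g < 0) for g in grad]
    return [xi + epsilon * s for xi, s in zip(x, signs)]


# Hypothetical model parameters and input (assumptions, not from a real system).
weights = [2.0, -1.5, 0.5]
bias = 0.1
x = [0.4, 0.1, 0.3]
y_true = 1

x_adv = fgsm_perturb(weights, bias, x, y_true, epsilon=0.3)
clean_p = predict_proba(weights, bias, x)
adv_p = predict_proba(weights, bias, x_adv)

print(f"clean P(class 1) = {clean_p:.3f}")
print(f"adversarial P(class 1) = {adv_p:.3f}")
```

In this toy setting the clean input is classified as class 1, while the perturbed input falls on the other side of the 0.5 decision threshold. An adversarial test would report how often such flips occur across a test set for a given `epsilon`.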

Poisoning attacks, on the other hand, involve injecting malicious data points into the training dataset in order to manipulate the model’s behavior. By introducing subtle changes to the training data, an attacker can influence the model’s learning process and cause it to make incorrect predictions on new, unseen data. Adversarial testing can help to detect and mitigate the effects of poisoning attacks by evaluating the model’s performance on contaminated datasets and identifying patterns of misclassification.
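One way to evaluate a model against poisoning, sketched below under simplifying assumptions, is to train it once on clean data and once on data with injected points, then compare accuracy on the same clean test set. The nearest-centroid classifier, the one-dimensional data, and the poison points are all hypothetical. The poison is deliberately exaggerated so the effect is easy to see, whereas a real attacker would usually be subtler.

```python
def fit_centroids(points, labels):
    """Nearest-centroid classifier in 1-D: store the mean of each class."""
    centroids = {}
    for label in set(labels):
        members = [p for p, l in zip(points, labels) if l == label]
        centroids[label] = sum(members) / len(members)
    return centroids


def predict(centroids, point):
    """Return the label whose centroid is closest to the point."""
    return min(centroids, key=lambda label: abs(point - centroids[label]))


def accuracy(centroids, points, labels):
    hits = sum(predict(centroids, p) == l for p, l in zip(points, labels))
    return hits / len(points)


# Hypothetical clean training and test data.
train_x = [0.0, 1.0, 2.0, 8.0, 9.0, 10.0]
train_y = [0, 0, 0, 1, 1, 1]
test_x = [1.5, 3.0, 4.0, 7.0, 8.5]
test_y = [0, 0, 0, 1, 1]

# Injected points: values far to the right, deliberately mislabeled as class 0.
poison_x = [20.0, 20.0, 20.0]
poison_y = [0, 0, 0]

clean_model = fit_centroids(train_x, train_y)
poisoned_model = fit_centroids(train_x + poison_x, train_y + poison_y)

clean_acc = accuracy(clean_model, test_x, test_y)
poisoned_acc = accuracy(poisoned_model, test_x, test_y)

print(f"accuracy with clean training data: {clean_acc:.2f}")
print(f"accuracy with poisoned training data: {poisoned_acc:.2f}")
```

The injected points drag the class 0 centroid past the class 1 centroid, so every test point ends up assigned to class 1. Comparing the two accuracy figures, and looking at which class absorbs the errors, is the kind of misclassification pattern this test is meant to surface.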

Model inversion attacks and the related, but distinct, membership inference attacks both aim to extract sensitive information about the data used to train a machine learning model. In model inversion, an attacker observes the model’s outputs and uses them to reconstruct features of the training data, which can reveal confidential information about the individuals or entities represented in the dataset. In membership inference, the attacker instead tries to determine whether a specific record was part of the training set. Adversarial testing can help to assess these privacy risks and to develop defenses against unauthorized data disclosure.
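A simple privacy check in this family is a confidence-threshold membership inference test: if a model is noticeably more confident on records it was trained on, an attacker can guess membership from confidence alone. The sketch below is a toy version. The `confidence` function is a hypothetical stand-in for an overfit model that has memorized its training points, and the data and threshold are invented for illustration.

```python
import math


def confidence(train_points, x, scale=1.0):
    """Toy stand-in for an overfit model: confidence decays with the
    distance from x to the nearest memorized training point."""
    nearest = min(abs(x - t) for t in train_points)
    return math.exp(-nearest / scale)


def infer_membership(train_points, candidates, threshold):
    """Guess that a candidate was in the training set if confidence is high."""
    return [confidence(train_points, c) >= threshold for c in candidates]


def attack_accuracy(guesses, truth):
    return sum(g == t for g, t in zip(guesses, truth)) / len(truth)


# Hypothetical training set, plus candidate records with known ground truth.
train_points = [0.5, 2.0, 3.5, 7.0]
candidates = [0.5, 3.5, 7.0, 1.2, 5.0, 9.0]
is_member = [True, True, True, False, False, False]

guesses = infer_membership(train_points, candidates, threshold=0.9)
attack_acc = attack_accuracy(guesses, is_member)

print(f"membership inference accuracy: {attack_acc:.2f}")
```

With a balanced set of members and non-members, an attack accuracy well above 0.5 suggests the model leaks information about its training data. A real assessment would query the deployed model rather than a stand-in function.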

Overall, adversarial testing plays a crucial role in evaluating the security and reliability of machine learning models in real-world applications. By simulating various types of attacks and testing the model’s response to adversarial inputs, researchers and practitioners can gain insights into the model’s vulnerabilities and limitations. This information can be used to improve the model’s robustness, enhance its defenses against adversarial attacks, and ensure its safe deployment in critical systems.

1. Identifying vulnerabilities in AI systems: Adversarial testing uncovers weaknesses in AI systems that may not be apparent through traditional testing methods.

2. Improving robustness and reliability: Once these weaknesses are identified, developers can address them so that the system behaves more consistently on unexpected or manipulated inputs.

3. Enhancing security: Adversarial testing identifies potential security threats, allowing developers to implement the measures needed to protect the system against attacks.

4. Enhancing performance: Testing under adversarial conditions exposes weaknesses that degrade functionality, which developers can then fix to improve the system’s performance.

5. Ensuring fairness and accountability: Adversarial testing can help to make AI systems fair and accountable by identifying biases and discriminatory behaviors so that they can be addressed.

6. Enhancing trust and transparency: Demonstrating that a system withstands adversarial attacks and keeps functioning under challenging conditions can increase trust in it.

1. Adversarial machine learning

2. Cybersecurity

3. Natural language processing

4. Image recognition

5. Fraud detection

6. Autonomous vehicles

7. Malware detection

8. Network security

9. Anomaly detection

10. Speech recognition
