Adversarial training for text generation models is a technique used in artificial intelligence (AI) to improve the robustness of natural language processing (NLP) models. The model is exposed to adversarial examples during training so that it continues to generate accurate and coherent text even when its inputs have been perturbed.

Adversarial examples are inputs that are intentionally designed to deceive a machine learning model. In the context of text generation, they can be sentences or phrases that have been slightly modified, for example by a typo or a word substitution, so that the model produces incorrect or nonsensical output. By incorporating adversarial examples into the training data, the text generation model can learn to handle these inputs and still generate accurate and coherent text.
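As an illustration of what a "slightly modified" input can look like, the sketch below creates a character-level perturbation by swapping adjacent letters. It is only one simple way to construct such an example, not a method prescribed by this article; the function name `perturb_text` and its parameters are hypothetical.

```python
import random


def perturb_text(text: str, n_swaps: int = 2, seed: int = 0) -> str:
    """Return a copy of `text` with up to `n_swaps` adjacent-letter swaps.

    Hypothetical helper for illustration: real adversarial attacks usually
    search for the perturbation that hurts the model most, whereas this
    function picks swap positions at random.
    """
    rng = random.Random(seed)
    chars = list(text)
    # Candidate positions: two neighbouring letters that differ, so that
    # swapping them visibly changes the text.
    candidates = [
        i
        for i in range(len(chars) - 1)
        if chars[i].isalpha() and chars[i + 1].isalpha() and chars[i] != chars[i + 1]
    ]
    for i in rng.sample(candidates, min(n_swaps, len(candidates))):
        chars[i], chars[i + 1] = chars[i + 1], chars[i]
    return "".join(chars)


original = "Translate this sentence into French."
perturbed = perturb_text(original)
print(perturbed)
```

The perturbed sentence keeps the same characters and length as the original, so a human reader can still recover the meaning, while a model that has only seen clean text may not.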

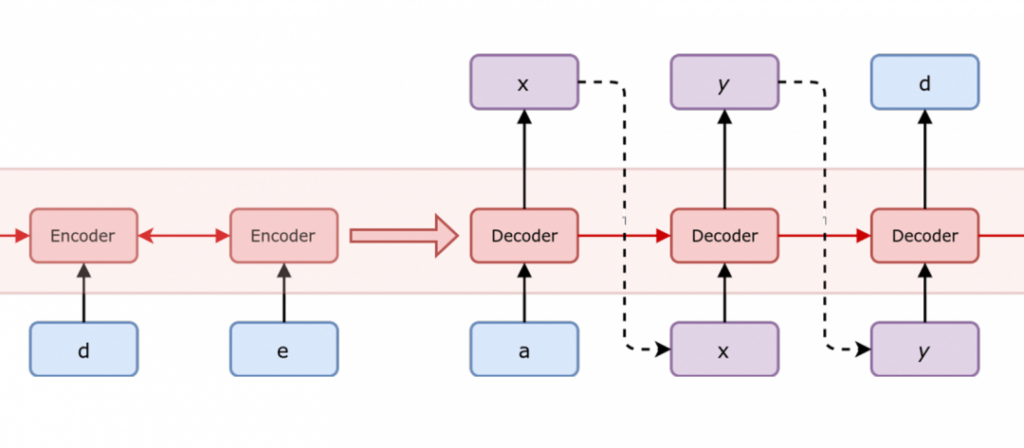

The goal of adversarial training for text generation models is to improve the model’s ability to generate text that is both fluent and semantically meaningful, including when the input is noisy or deliberately perturbed. This can be particularly challenging in NLP tasks such as machine translation, text summarization, and dialogue generation, where the model must understand and generate human-like text.

One of the key benefits of adversarial training is that it can help to improve the generalization capabilities of text generation models. By exposing the model to a diverse range of inputs, including adversarial examples, during training, the model can learn to better handle variations in the input data and produce more robust and accurate output.

There are several different approaches to adversarial training for text generation models, including using adversarial examples generated by other models, incorporating adversarial training objectives into the model’s loss function, and fine-tuning the model on a combination of clean and adversarial data. Each of these approaches has its own advantages and limitations, and the choice of method will depend on the specific requirements of the text generation task.
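Two of the approaches above, fine-tuning on a combination of clean and adversarial data and adding an adversarial term to the loss, can be sketched in a few lines. This is a minimal, framework-free sketch under stated assumptions: the names `swap_adjacent`, `build_mixed_dataset`, and `combined_loss`, the `adv_fraction` and `alpha` parameters, and the example sentence pairs are all hypothetical, and the random character swap stands in for whatever attack a real pipeline would use.

```python
import random
from typing import Callable, List, Tuple

Pair = Tuple[str, str]  # (source text, target text)


def swap_adjacent(text: str, rng: random.Random) -> str:
    """Hypothetical perturbation: swap one random pair of adjacent characters."""
    if len(text) < 2:
        return text
    i = rng.randrange(len(text) - 1)
    return text[:i] + text[i + 1] + text[i] + text[i + 2:]


def build_mixed_dataset(
    clean: List[Pair],
    adv_fraction: float = 0.5,
    seed: int = 0,
    perturb: Callable[[str, random.Random], str] = swap_adjacent,
) -> List[Pair]:
    """Combine clean pairs with perturbed copies of a sample of them.

    Each adversarial pair keeps the ORIGINAL target, so the model is trained
    to produce the correct output despite the perturbed input.
    """
    rng = random.Random(seed)
    n_adv = int(len(clean) * adv_fraction)
    adversarial = [(perturb(src, rng), tgt) for src, tgt in rng.sample(clean, n_adv)]
    mixed = clean + adversarial
    rng.shuffle(mixed)
    return mixed


def combined_loss(clean_loss: float, adv_loss: float, alpha: float = 0.5) -> float:
    """Weighted sum of the loss on clean inputs and the loss on adversarial inputs."""
    return (1.0 - alpha) * clean_loss + alpha * adv_loss


clean_pairs = [
    ("good morning", "bonjour"),
    ("thank you", "merci"),
    ("see you soon", "à bientôt"),
    ("good night", "bonne nuit"),
]
mixed_pairs = build_mixed_dataset(clean_pairs, adv_fraction=0.5)
print(len(mixed_pairs), combined_loss(1.0, 3.0, alpha=0.25))
```

The weight `alpha` controls how much the adversarial term influences training: setting it to 0 recovers ordinary training on clean data, while larger values put more emphasis on robustness.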

Overall, adversarial training is an important technique for making text generation models more robust. By exposing the model to adversarial examples during training, researchers and developers can better prepare it to handle a wide range of inputs while still producing accurate and coherent text.

Commonly cited benefits of adversarial training include:

1. Improved robustness: Adversarial training helps text generation models become more robust against adversarial attacks and input perturbations.

2. Enhanced performance: By training on adversarial examples, text generation models can achieve better performance on challenging tasks, such as those involving noisy or deliberately perturbed input.

3. Increased security: Adversarial training can help improve the security of text generation models by making them less vulnerable to attacks.

4. Better generalization: Training on adversarial examples can help text generation models generalize better to unseen data.

5. Mitigation of bias: Adversarial training can help mitigate biases in text generation models by exposing them to diverse and challenging examples.

6. Improved interpretability: By training on adversarial examples, text generation models may become more interpretable and transparent in their decision-making processes.

Areas where adversarial training is applied include:

1. Natural language processing

2. Text generation

3. Sentiment analysis

4. Machine translation

5. Chatbots

6. Speech recognition

7. Image recognition

8. Fraud detection

9. Cybersecurity

10. Autonomous vehicles
