Adversarial training with proximal gradient descent is a technique for improving the robustness of machine learning models against adversarial attacks. In an adversarial attack, an adversary deliberately manipulates input data, often with changes too small for a human to notice, so that the model makes an incorrect prediction. Such attacks are especially harmful in applications such as image recognition, natural language processing, and autonomous driving, where the consequences of misclassification can be severe.

Adversarial training makes a model more resilient to these attacks by exposing it to adversarially perturbed examples during training, so that it learns to predict correctly on perturbed as well as clean inputs. The gain in robustness can come at some cost in accuracy on unperturbed data. Proximal gradient descent is an optimization algorithm for objectives that combine a smooth loss function with a regularization term or constraint that may not be differentiable: each iteration takes a gradient step on the loss and then applies a proximal operator for the extra term. Combining the two yields models that are both trained against adversarial perturbations and regularized through the proximal step.
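One common way to write this combination down is sketched below. The notation is an assumption introduced here for illustration and does not come from the original text: $f_\theta$ is the model with parameters $\theta$, $\ell$ the loss, $\delta$ a perturbation bounded by $\epsilon$ in the $\ell_\infty$ norm, $R$ a regularizer with weight $\lambda$, and $\eta$ the step size.

$$
\min_{\theta}\; L(\theta) + \lambda R(\theta),
\qquad
L(\theta) = \mathbb{E}_{(x,y)}\Big[\max_{\|\delta\|_\infty \le \epsilon} \ell\big(f_\theta(x+\delta),\, y\big)\Big]
$$

$$
\theta_{k+1} = \operatorname{prox}_{\eta\lambda R}\big(\theta_k - \eta \nabla_\theta L(\theta_k)\big),
\qquad
\operatorname{prox}_{\eta\lambda R}(v) = \arg\min_{u}\; \eta\lambda R(u) + \tfrac{1}{2}\|u - v\|_2^2
$$

In practice the inner maximization is only approximated, for example by an attack such as FGSM or PGD, and the gradient is taken with the resulting perturbation held fixed.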

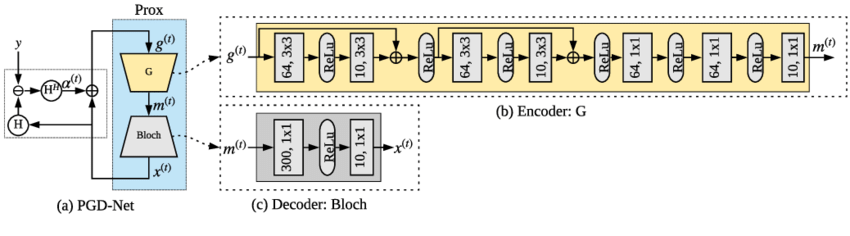

The key idea is to incorporate adversarial examples into training so that the model learns features that are robust to small perturbations of the input. Adversarial examples are generated from the current model with techniques such as the Fast Gradient Sign Method (FGSM), which takes a single step of fixed size along the sign of the loss gradient with respect to the input, or Projected Gradient Descent (PGD), which repeats smaller steps and projects the result back into the allowed perturbation range. The model is then trained to minimize a loss that penalizes both the standard prediction error and the error on the adversarial examples, which pushes it toward features that are insensitive to small changes in the input.
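The FGSM step and the combined loss can be illustrated with a minimal NumPy sketch. It assumes a plain logistic regression model so that the input gradient has a closed form; the function names, the mixing weight `alpha`, and the perturbation size `epsilon` are hypothetical choices made for this example, not part of any particular library.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def bce_loss(w, b, X, y):
    """Mean binary cross-entropy of a logistic model on (X, y)."""
    p = sigmoid(X @ w + b)
    tiny = 1e-12  # guards against log(0)
    return -np.mean(y * np.log(p + tiny) + (1 - y) * np.log(1 - p + tiny))

def fgsm(w, b, X, y, epsilon):
    """One-step FGSM: shift each input by epsilon along the sign of dLoss/dInput."""
    p = sigmoid(X @ w + b)
    # For logistic regression, the per-example loss gradient w.r.t. x is (p - y) * w.
    grad_X = (p - y)[:, None] * w[None, :]
    return X + epsilon * np.sign(grad_X)

def combined_loss(w, b, X, y, epsilon, alpha=0.5):
    """Weighted sum of the clean loss and the loss on FGSM-perturbed inputs.

    alpha is an assumed mixing weight: 1.0 is standard training,
    0.0 trains on adversarial examples only.
    """
    X_adv = fgsm(w, b, X, y, epsilon)
    return alpha * bce_loss(w, b, X, y) + (1 - alpha) * bce_loss(w, b, X_adv, y)

# Toy usage with synthetic data.
rng = np.random.default_rng(0)
X = rng.normal(size=(8, 3))
y = (X[:, 0] > 0).astype(float)
w = np.array([1.0, -0.5, 0.25])
b = 0.1
print("clean loss:   ", bce_loss(w, b, X, y))
print("combined loss:", combined_loss(w, b, X, y, epsilon=0.1))
```

For a deep network the input gradient would come from automatic differentiation rather than a closed form, and PGD would repeat the perturbation step several times with a projection onto the allowed range.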

Proximal gradient descent updates the model parameters in two stages: a gradient step on the (adversarial) training loss, followed by a proximal operator that accounts for a regularizer or constraint on the parameters. For an L1 penalty, for example, the proximal operator is soft-thresholding, which shrinks each parameter toward zero and sets small ones exactly to zero, producing sparse models. Regularization of this kind, whether it promotes sparsity or smoothness, can help prevent overfitting and improve generalization. Combined with adversarial training, it gives a training procedure that targets robustness to adversarial attacks and well-regularized parameters at the same time.
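A minimal sketch of one such update is shown below, assuming a logistic regression model without a bias term, FGSM as the attack, and an L1 penalty as the regularizer, for which the proximal operator is soft-thresholding. The function names and the hyperparameters `epsilon`, `lr`, and `lam` are illustrative assumptions, not a reference implementation.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def soft_threshold(v, t):
    """Proximal operator of t * ||v||_1: shrink each entry toward zero by t."""
    return np.sign(v) * np.maximum(np.abs(v) - t, 0.0)

def adversarial_prox_step(w, X, y, epsilon, lr, lam):
    """One proximal gradient step on the FGSM-perturbed logistic loss with an L1 penalty."""
    # Attack: FGSM perturbation of the inputs for the current weights.
    p = sigmoid(X @ w)
    X_adv = X + epsilon * np.sign((p - y)[:, None] * w[None, :])
    # Gradient of the mean logistic loss on the perturbed batch, with X_adv held fixed.
    p_adv = sigmoid(X_adv @ w)
    grad_w = X_adv.T @ (p_adv - y) / len(y)
    # Gradient step on the smooth loss, then the proximal step for lam * ||w||_1.
    return soft_threshold(w - lr * grad_w, lr * lam)

# Toy usage: only the first two features carry signal in this synthetic data.
rng = np.random.default_rng(0)
X = rng.normal(size=(200, 10))
y = (X[:, 0] - X[:, 1] > 0).astype(float)
w = np.zeros(10)
for _ in range(200):
    w = adversarial_prox_step(w, X, y, epsilon=0.05, lr=0.1, lam=0.05)
print("learned weights:", np.round(w, 3))
```

Swapping the regularizer only changes `soft_threshold`: a constraint set would use a projection onto that set instead, and a different penalty would use its own proximal operator.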

In conclusion, adversarial training with proximal gradient descent improves the robustness of machine learning models in the face of adversarial attacks. Adversarial examples in the training process teach the model to resist input perturbations, and the proximal update keeps the parameters regularized. Together they can make machine learning systems more secure, reliable, and trustworthy in real-world settings where threats keep evolving.

Benefits of adversarial training with proximal gradient descent:

1. Improved robustness of AI models against adversarial attacks

2. Enhanced generalization capabilities of AI models

3. Increased accuracy and reliability of AI models

4. Better performance on real-world data and scenarios

5. Potential for more efficient and effective AI systems

6. Advancement in the field of AI research and development

7. Potential applications in industries such as cybersecurity, healthcare, and finance

Applications of adversarial training with proximal gradient descent:

1. Image recognition and classification

2. Natural language processing

3. Fraud detection in financial transactions

4. Cybersecurity and network intrusion detection

5. Autonomous vehicles and robotics

6. Healthcare diagnostics and personalized medicine

7. Video game AI and player behavior prediction

8. Recommendation systems for e-commerce and content platforms

9. Anomaly detection in industrial processes

10. Sentiment analysis and social media monitoring
